Understanding Cybersecurity Metrics and Analytics

Tracking the right cybersecurity metrics transforms security from "we think we're protected" to "we can prove we're reducing risk."

Cybersecurity metrics transform subjective security assessments into measurable outcomes. Organizations tracking the right metrics—vulnerability management MTTR (74 days average for critical findings), cloud attack surface reduction velocity, security analytics performance, and least privilege effectiveness—detect threats 2× faster and save $2.2 million on breach costs. The difference between security theater and actual risk reduction comes down to measuring outcomes that matter: remediation velocity, exposure reduction, and proactive prevention, not vanity metrics like alert counts or vulnerability totals.

The CISO stands at the whiteboard during the quarterly business review. "Are we more secure than we were six months ago?" the CFO asks. The CISO points to deployment statistics: three new security tools implemented, 2,847 vulnerabilities patched, 94,000 suspicious login attempts blocked. "So... yes?" the CFO presses. The CISO pauses. "I think so."

That pause is the gap between data and insight. Security teams collect massive amounts of data—vulnerability scans, SIEM logs, access reviews, cloud configuration checks—but struggle to answer simple questions about whether security is improving or degrading.

According to IBM's Cost of a Data Breach Report, organizations that effectively measure security outcomes save an average of $2.2 million on breach costs and detect incidents 2× faster than those relying on gut feel. The difference between security theater and measurable risk reduction comes down to tracking the right cybersecurity metrics: not just collecting data, but measuring outcomes that prove you're getting more secure.

Discovery metrics show whether your visibility is complete or full of blind spots. Asset coverage indicates whether all systems are being scanned. Many teams uncover major gaps once cloud infrastructure and shadow IT are included.

Tracking newly discovered assets outside your inventory highlights what you’re missing, while consistent scan schedules ensure systems don’t quietly fall out of scope.

Prioritization metrics reveal whether effort is focused on the vulnerabilities that matter most. Large backlogs of critical vulnerabilities often signal overload or ineffective prioritization.

Contextual risk scoring improves focus by combining CVSS severity with exploitability (active exploitation or proof-of-concept availability) and business impact (asset criticality and data sensitivity). This helps teams address the vulnerabilities with the highest real-world risk first.
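As a rough illustration of combining severity, exploitability, and business impact, here is a minimal Python sketch. The weights, the `Finding` fields, and the placeholder IDs `CVE-A` and `CVE-B` are all assumptions made for this example, not a standard formula; real programs tune these values to their own environment.

```python
from dataclasses import dataclass

# Hypothetical multipliers; adjust them to your own risk appetite.
EXPLOIT_WEIGHT = {"none": 1.0, "poc": 1.3, "active": 1.6}
CRITICALITY_WEIGHT = {"low": 0.7, "medium": 1.0, "high": 1.3}


@dataclass
class Finding:
    cve_id: str
    cvss: float              # CVSS base score, 0.0 to 10.0
    exploit_status: str      # "none", "poc", or "active"
    asset_criticality: str   # "low", "medium", or "high"
    sensitive_data: bool     # asset stores or processes sensitive data


def contextual_risk(f: Finding) -> float:
    """Scale CVSS by exploitability and business impact, capped at 10."""
    score = f.cvss
    score *= EXPLOIT_WEIGHT[f.exploit_status]
    score *= CRITICALITY_WEIGHT[f.asset_criticality]
    if f.sensitive_data:
        score *= 1.2  # assumed uplift for sensitive data
    return round(min(score, 10.0), 1)


# Placeholder findings, not real CVEs.
findings = [
    Finding("CVE-A", 9.8, "none", "low", False),
    Finding("CVE-B", 7.5, "active", "high", True),
]
ranked = sorted(findings, key=contextual_risk, reverse=True)
```

In this sketch the actively exploited finding on a high-criticality asset outranks the one with the higher raw CVSS score, which is the point of adding context.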

Remediation metrics show whether fixes are happening quickly enough.

Mean Time to Remediate (MTTR) by severity is especially important—shorter timelines significantly reduce exposure and breach risk.

Tracking vulnerabilities closed over time and SLA compliance shows whether remediation efforts are keeping up with new findings.
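MTTR by severity and SLA compliance can both be derived from the open and close dates of remediated findings. The sketch below assumes a simple tuple format and hypothetical SLA targets in `SLA_DAYS`; the sample dates are illustrative only.

```python
from collections import defaultdict
from datetime import date
from statistics import mean

# Hypothetical SLA targets, in days, per severity.
SLA_DAYS = {"critical": 15, "high": 30, "medium": 90}

# (severity, opened, closed) for remediated findings; illustrative data.
closed_findings = [
    ("critical", date(2024, 1, 1), date(2024, 1, 11)),
    ("critical", date(2024, 1, 5), date(2024, 1, 25)),
    ("high", date(2024, 1, 2), date(2024, 2, 1)),
]


def mttr_and_sla(findings):
    """Return {severity: (mean days to remediate, fraction closed within SLA)}."""
    days_by_severity = defaultdict(list)
    for severity, opened, closed in findings:
        days_by_severity[severity].append((closed - opened).days)
    return {
        sev: (mean(days), sum(d <= SLA_DAYS[sev] for d in days) / len(days))
        for sev, days in days_by_severity.items()
    }


report = mttr_and_sla(closed_findings)
```

Running the same calculation each month and comparing the results gives the trend line rather than a single snapshot.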

Verification closes the loop.

Re-scanning confirms vulnerabilities were successfully fixed, while re-opened issues point to failed patches or configuration drift.

Long-term trends matter more than snapshots. Falling MTTR, improving SLA compliance, and steady quarter-over-quarter progress indicate a vulnerability management program that’s getting stronger—not just busier.

Measuring cloud attack surface management isn’t about how many issues you uncover—it’s about whether risk is actually going down. Many teams confuse discovery with progress, but if exposures aren’t being fixed, the attack surface continues to grow.

Start with visibility. You should understand how complete your asset inventory is, how often new or unknown assets appear, and how quickly new cloud resources are detected after creation.

Effective discovery happens in minutes, not hours, and spans all cloud platforms and SaaS environments—not just your primary cloud account.

Next, focus on exposure. Track how many assets are publicly accessible and, more importantly, how many pose real risk—such as exposed databases, unencrypted storage buckets, or internet-facing administrative interfaces.

Measure how long internet-facing vulnerabilities remain open and how easily attackers could reach critical systems. Shorter exposure windows and fewer attack paths translate directly to lower risk.

Prioritization is just as critical as discovery. High-risk findings should align with real-world incidents or near-misses. If analysts spend time chasing alerts that prove harmless, your detection and prioritization logic needs tuning.

The goal is fewer alerts, higher confidence, and faster action.

Finally, measure remediation. If you’re discovering more exposures each month than you’re fixing, your attack surface is expanding. Track how quickly critical issues are resolved and how consistently fixes meet defined timeframes.

The strongest programs resolve most serious exposures before attackers ever exploit them.
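One way to check whether the attack surface is expanding is to keep a running count of open exposures from monthly discovery and fix totals. This is a minimal sketch with made-up numbers; `open_exposure_trend` and its input format are assumptions for illustration.

```python
# Illustrative monthly counts: (month, exposures discovered, exposures fixed).
monthly = [("2024-01", 40, 25), ("2024-02", 35, 38), ("2024-03", 30, 42)]


def open_exposure_trend(counts, starting_open=0):
    """Return the running count of open exposures after each month."""
    open_count = starting_open
    trend = []
    for month, discovered, fixed in counts:
        open_count += discovered - fixed
        trend.append((month, open_count))
    return trend


trend = open_exposure_trend(monthly, starting_open=100)
```

A rising series means discovery is outpacing remediation; a falling one means the backlog is actually shrinking.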

At the executive level, trends matter most. Is the overall attack surface shrinking? Are misconfigurations detected quickly? And most importantly, are risks being fixed before they show up in threat intelligence, or only after?

Teams that act before exposure turns into exploitation are staying ahead, not constantly catching up.

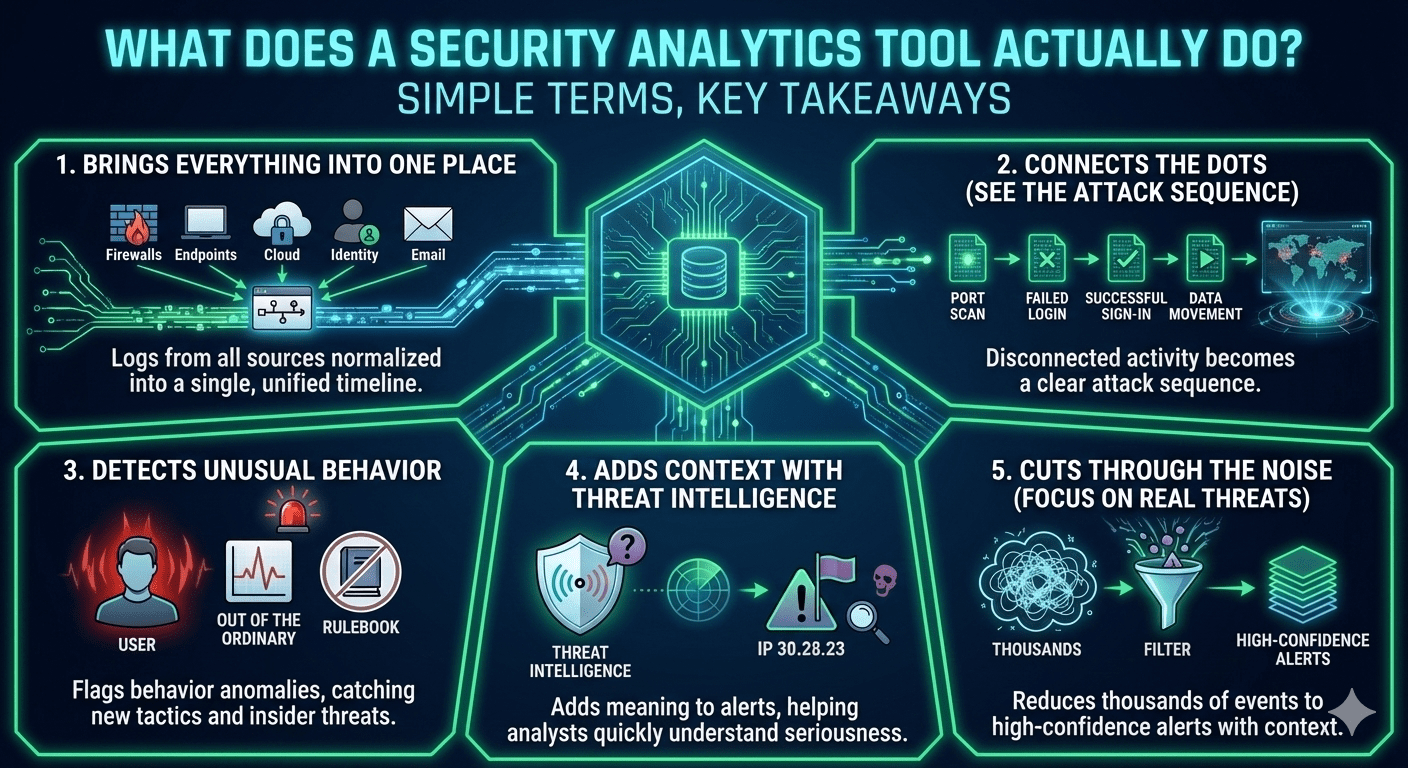

Security analytics exists for one simple reason: your security tools generate massive volumes of data, often millions of events per day, but only a small fraction (typically 1-5%) represents genuine threats requiring investigation.

Without analytics, SOC analysts jump between multiple consoles, spend far too long investigating alerts, and still miss attacks that only make sense when events are viewed together.

Analytics brings everything into one place. Logs from firewalls, endpoints, cloud platforms, identity systems, and email tools are normalized and tied into a single timeline. What would otherwise look like harmless, disconnected activity suddenly becomes a clear attack sequence.

It also connects the dots. A port scan, a few failed logins, a successful sign-in from an unusual location, and later data movement might not raise alarms on their own. Together, they tell a very different story, and analytics makes that story visible.
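To make the idea of correlation concrete, the sketch below groups normalized events by user and checks whether a suspicious sequence occurs in order within a time window. The event type names, the `SEQUENCE` pattern, and the 24-hour default are assumptions for this example; production analytics platforms use far richer correlation logic.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Hypothetical attack pattern built from normalized event types.
SEQUENCE = ["failed_login", "unusual_location_login", "data_transfer"]


def matches_sequence(timeline, sequence, window):
    """True if `sequence` occurs in order within `window` in a time-sorted timeline."""
    # latest_start[i]: most recent start time of a partial match of sequence[:i + 1]
    latest_start = [None] * len(sequence)
    for ts, kind in timeline:
        # Walk stages from last to first so one event advances one stage only.
        for i in range(len(sequence) - 1, -1, -1):
            if kind != sequence[i]:
                continue
            if i == 0:
                latest_start[0] = ts
            elif latest_start[i - 1] is not None:
                latest_start[i] = latest_start[i - 1]
        start = latest_start[-1]
        if start is not None and ts - start <= window:
            return True
    return False


def flag_users(events, sequence=SEQUENCE, window=timedelta(hours=24)):
    """Group (timestamp, user, event_type) events by user and flag full matches."""
    by_user = defaultdict(list)
    for ts, user, kind in sorted(events):
        by_user[user].append((ts, kind))
    return sorted(u for u, tl in by_user.items() if matches_sequence(tl, sequence, window))


# Illustrative events only.
events = [
    (datetime(2024, 3, 1, 9, 0), "alice", "failed_login"),
    (datetime(2024, 3, 1, 9, 5), "alice", "failed_login"),
    (datetime(2024, 3, 1, 9, 30), "alice", "unusual_location_login"),
    (datetime(2024, 3, 1, 13, 0), "alice", "data_transfer"),
    (datetime(2024, 3, 1, 10, 0), "bob", "failed_login"),
    (datetime(2024, 3, 1, 11, 0), "bob", "data_transfer"),
]
flagged = flag_users(events)
```

Each of the sample events looks harmless alone; only the user whose events form the whole sequence is flagged.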

Instead of relying only on fixed rules, analytics looks for behavior that’s out of the ordinary. When a user suddenly acts in ways they never have before, it gets flagged—even if the attack doesn’t match a known pattern. This is how teams catch new tactics and insider threats that traditional tools often miss.
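A very simple form of behavioral baselining compares today's activity with the same user's own history. The sketch below uses a z-score with an assumed threshold of 3; the function name, the threshold, and the sample figures (daily megabytes downloaded) are illustrative, and real products rely on more robust models.

```python
from statistics import mean, stdev


def is_anomalous(history, today, threshold=3.0):
    """Flag `today` if it is more than `threshold` standard deviations
    above the user's own historical mean."""
    if len(history) < 2:
        return False  # not enough history to form a baseline
    baseline, spread = mean(history), stdev(history)
    if spread == 0:
        return today > baseline
    return (today - baseline) / spread > threshold


# Illustrative daily download volumes in MB for one user.
history = [120, 95, 110, 130, 105]
spike = is_anomalous(history, 900)
normal_day = is_anomalous(history, 125)
```

Because the baseline is per user, the same volume can be routine for one account and a red flag for another.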

Context matters too. Threat intelligence adds meaning to alerts by showing whether an IP, file, or behavior is linked to active attack campaigns. That context helps analysts quickly understand what they’re dealing with and how serious it is.

Most importantly, analytics cuts through the noise. Thousands of raw events are reduced to a manageable set of high-confidence alerts, letting analysts focus on real threats instead of false positives. And when alerts arrive, they come with built-in context—who was involved, what changed, and why it matters—so decisions take minutes, not hours.

Least-privilege access means giving users and systems only the access they truly need—and being able to prove it’s working. This matters because many breaches begin with stolen or misused privileged credentials. When access accumulates unchecked, risk grows with it.

Start by measuring the overall level of access in your environment. Permission audits often reveal users with more access than their roles require or permissions they haven’t used in months.

Dormant accounts, especially former employee or service accounts, are another major risk that quietly increases the potential blast radius of a breach.
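Both signals described here, unused permissions and dormant accounts, can be computed from data most identity systems already export. The following sketch assumes two hypothetical inputs: a mapping of account to last login date, and mappings of granted versus actually used permissions. The 90-day idle cutoff is also an assumption.

```python
from datetime import date, timedelta


def dormant_accounts(last_login, today, max_idle_days=90):
    """Accounts with no login in the last `max_idle_days` days, or none at all."""
    cutoff = today - timedelta(days=max_idle_days)
    return sorted(
        name for name, last in last_login.items() if last is None or last < cutoff
    )


def unused_permissions(granted, used):
    """Map each user to permissions they hold but did not use in the review period."""
    unused = {}
    for user, perms in granted.items():
        extra = perms - used.get(user, set())
        if extra:
            unused[user] = extra
    return unused


# Illustrative data only.
today = date(2024, 6, 30)
last_login = {
    "alice": date(2024, 6, 28),
    "former.employee": date(2023, 11, 2),
    "svc-backup": None,
}
granted = {"alice": {"read", "write", "admin"}, "bob": {"read"}}
used = {"alice": {"read", "write"}, "bob": {"read"}}

stale = dormant_accounts(last_login, today)   # ["former.employee", "svc-backup"]
excess = unused_permissions(granted, used)    # {"alice": {"admin"}}
```

Tracking the size of both results over time shows whether access is being trimmed or quietly accumulating.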

The way access is requested and approved provides important signals. If approvals take too long, teams look for workarounds. If almost nothing is rejected, access reviews may be little more than rubber stamps.
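The two review signals mentioned above, approval speed and rejection rate, are easy to compute from a request log. This sketch assumes each request is recorded as a decision plus the hours it took; the field layout and sample values are hypothetical.

```python
from statistics import median

# Illustrative access requests: (decision, hours from request to decision).
requests = [
    ("approved", 2.0),
    ("approved", 30.0),
    ("rejected", 4.0),
    ("approved", 6.0),
]


def review_signals(reqs):
    """Summarize how fast and how critically access requests are reviewed."""
    decisions = [decision for decision, _ in reqs]
    hours = [h for _, h in reqs]
    return {
        "rejection_rate": decisions.count("rejected") / len(decisions),
        "median_hours_to_decision": median(hours),
    }


signals = review_signals(requests)
```

A rejection rate near zero or a steadily growing median decision time are both worth a closer look.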

Time-limited access is a strong indicator of maturity—temporary privileges should expire automatically instead of turning into permanent admin rights.

User behavior shows whether controls are effective. Fewer privilege escalation attempts, reduced lateral movement, and fewer exposed credentials indicate that least-privilege controls are working as intended.

Strong programs balance protection with usability. Regular access reviews, rapid removal of unnecessary permissions, and just-in-time access for administrators ensure controls exist in practice—not just on paper.

As excessive access and dormant accounts decline, and incidents tied to permissions drop, you can be confident that least privilege is genuinely reducing risk.

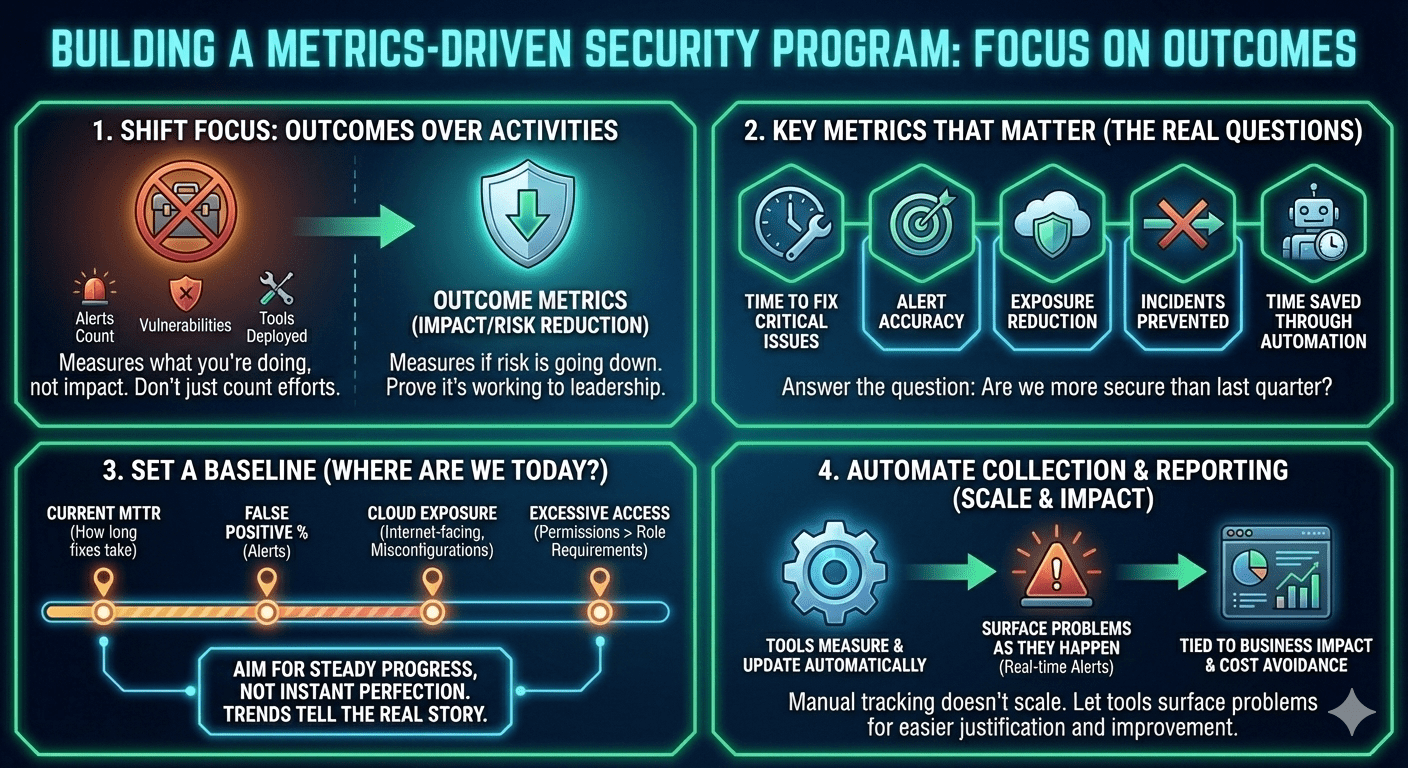

Moving from data collection to data-driven security requires focusing on outcomes instead of activities. The goal isn't maximizing metrics collected—it's measuring what proves you're reducing risk and can demonstrate that progress to leadership.

Start with outcomes, not vanity metrics. Counting alerts, vulnerabilities, or tools deployed shows effort, not impact. These are activity metrics—they measure what you're doing, not whether it's working. What actually matters is whether risk is going down.

Metrics like time to fix critical issues, alert accuracy, exposure reduction, incidents prevented, and time saved through automation answer the real question executives care about: are we more secure than last quarter?

Set a baseline before trying to improve anything. Capture where you are today—how long fixes take (current MTTR), what percentage of alerts are false positives, how much exposure exists in the cloud (internet-facing assets, misconfigurations), and how many users have excessive access (permissions beyond role requirements). Aim for steady progress, not instant perfection. Trends over time tell the real story.
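Once a baseline exists, progress can be expressed as percent change per metric. In the sketch below, the 74-day MTTR comes from the average cited earlier in this article; every other number and metric name is a made-up placeholder for illustration.

```python
def change_vs_baseline(baseline, current):
    """Return percent change per metric; negative means the value went down."""
    return {
        name: round((current[name] - base) / base * 100, 1)
        for name, base in baseline.items()
        if base  # skip zero baselines to avoid dividing by zero
    }


# Baseline snapshot (placeholder values except mttr_days).
baseline = {
    "mttr_days": 74.0,
    "false_positive_rate": 0.40,
    "internet_facing_assets": 250,
    "users_with_excess_access": 120,
}
# A later snapshot, also illustrative.
current = {
    "mttr_days": 55.5,
    "false_positive_rate": 0.30,
    "internet_facing_assets": 200,
    "users_with_excess_access": 90,
}

progress = change_vs_baseline(baseline, current)
```

For all four of these metrics lower is better, so negative percentages indicate improvement.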

Automate metrics collection and reporting. Manual tracking doesn’t scale and quickly becomes outdated. Let your tools measure and update risk indicators automatically, and surface problems as they happen—not months later in a review. When metrics are tied back to business impact and cost avoidance, security becomes easier to justify and easier to improve.

Security teams gather large amounts of information about their environment, but the real question is whether that information leads to a more secure environment. The most meaningful approach is to track outcomes (whether risk is actually decreasing) rather than simply count activities. The measures that matter are time to remediate problems, size of the attack surface, and continuous tightening of permissions over time.

By measuring the right outcomes, security teams reduce costs while improving threat detection speed. Metrics like Mean Time to Remediate (MTTR) for vulnerabilities, cloud attack surface reduction, and least-privilege effectiveness support evidence-based decisions. When leadership asks "How secure are we?", you will have clear answers rather than guesswork.
